🚀 Uploading 1GB Files in the Browser with Next.js, AWS S3 & Presigned URLs — My Journey, Learnings & Gotchas

“What if I told you that uploading a 1GB file directly from your frontend can be done securely, efficiently, and reliably without melting your server?”

🌟 The story begins…

Not long ago, I decided to build a Next.js file uploader for large files (think: PDFs, ZIPs, videos). My goal?

Let the browser upload files directly to S3 with no heavy server load — and track the progress beautifully, even if the file is 1GB or more!

At first, it sounded easy — “just use S3 and a few APIs.”

But oh boy… I was wrong 😅

That’s where Multipart Uploads with Presigned URLs came to the rescue. Here’s the full story of how I built it, what I learned, and how you can do it too — the right way. 💪

📦 What is Multipart Upload? Why should you care?

When you upload a large file to S3, doing it in one go is risky — what if the network drops? Or the browser crashes?

Multipart upload solves this by:

- Splitting the file into small parts (e.g., 5MB–25MB)

- Uploading each part independently (even in parallel!)

- Then telling S3 to assemble them back together 💡

🔐 And what about Presigned URLs?

Presigned URLs let your frontend upload directly to S3 without exposing your AWS credentials. Think of it like a “temporary signed permission slip” that lasts for a few minutes.
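To make the "permission slip" idea concrete, here is a minimal sketch of how a server could hand out one presigned URL per part. All names are hypothetical and not taken from my repo. The signer is injected so the sketch carries no AWS dependency. In a real API route it would most likely wrap `getSignedUrl` from `@aws-sdk/s3-request-presigner` around an `UploadPartCommand`.

```typescript
// Hypothetical helper: the signing function is passed in by the caller.
type SignPart = (partNumber: number) => Promise<string>;

interface PresignedPart {
  partNumber: number; // S3 part numbers start at 1
  url: string;
}

// Collects one presigned URL per part number.
// In a real route, signPart would typically call something like:
//   getSignedUrl(s3, new UploadPartCommand({ Bucket, Key, UploadId, PartNumber }), { expiresIn })
async function presignParts(partCount: number, signPart: SignPart): Promise<PresignedPart[]> {
  if (!Number.isInteger(partCount) || partCount < 1 || partCount > 10000) {
    throw new RangeError("S3 allows between 1 and 10,000 parts per upload");
  }
  const partNumbers = Array.from({ length: partCount }, (_, i) => i + 1);
  return Promise.all(
    partNumbers.map(async (partNumber) => ({ partNumber, url: await signPart(partNumber) })),
  );
}
```

Keeping the signer separate also makes the route easy to unit test without touching AWS.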

🧰 Tech Stack

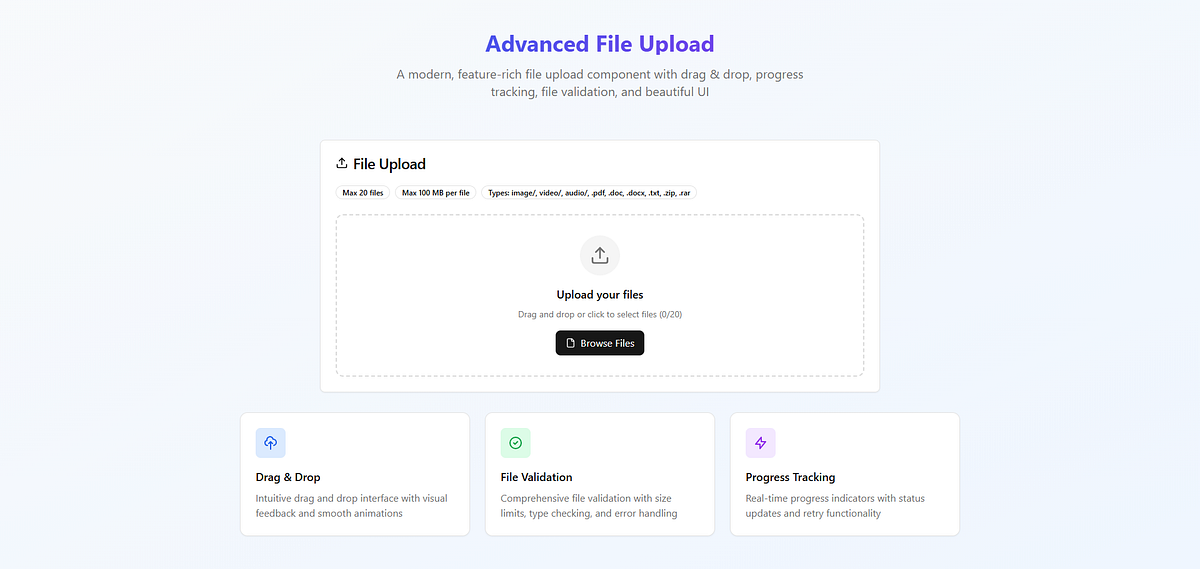

| Layer | Tech |
| --- | --- |
| Framework | Next.js (API Routes) |
| Cloud | AWS S3 |
| Auth method | Presigned URLs |
| SDK used | @aws-sdk/client-s3 |
| UI Features | Drag & drop, chunk progress, error handling |

🪄 Step-by-Step: How I Built the Multipart Upload System

Here’s the 30,000ft view of what happens when you upload a file 👇

1️⃣ Frontend sends file info

POST /api/create-multipart-upload

→ Returns { uploadId, key }

2️⃣ File is split into 100 equal chunks

I used JavaScript’s file.slice(start, end) to split the file.

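You can configure either the chunk size or the chunk count (e.g., 5MB chunks or 100 parts). Keep S3's limits in mind: every part except the last must be at least 5MB, and a single upload can have at most 10,000 parts.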
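Here is a rough sketch of the splitting step when you pick a chunk size rather than a chunk count. The function and type names are my own placeholders. It only computes byte ranges, which you would then feed to file.slice(start, end).

```typescript
interface PartRange {
  partNumber: number; // 1-based, as S3 expects
  start: number; // inclusive byte offset
  end: number; // exclusive byte offset, suitable for file.slice(start, end)
}

const MIN_PART_SIZE = 5 * 1024 * 1024; // S3 minimum for every part except the last

// Splits a file of `fileSize` bytes into consecutive ranges of `partSize` bytes.
// The last range may be shorter than `partSize`.
function planParts(fileSize: number, partSize: number): PartRange[] {
  if (partSize < MIN_PART_SIZE) {
    throw new RangeError("partSize must be at least 5 MiB");
  }
  const ranges: PartRange[] = [];
  for (let start = 0, n = 1; start < fileSize; start += partSize, n++) {
    ranges.push({ partNumber: n, start, end: Math.min(start + partSize, fileSize) });
  }
  return ranges;
}
```

In the browser this could be used as `planParts(file.size, 10 * 1024 * 1024).map((r) => file.slice(r.start, r.end))`. For a 1 GiB file with 10 MiB parts, that gives 103 ranges, with a shorter final part.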

3️⃣ Request Presigned URLs

POST /api/get-upload-urls

→ Returns signed PUT URLs for each part

4️⃣ Upload chunks using fetch(PUT) + AWS presigned URLs

Each chunk uploads to its respective URL with Content-Type: application/octet-stream.

🧐 Tip: You can upload parts in parallel to save time!
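A single part upload could look roughly like the sketch below. The function name is illustrative, and `url` is assumed to be one of the presigned URLs from the previous step. It returns the ETag that S3 assigns to the part, because that value is needed later to complete the upload.

```typescript
// Uploads one chunk to its presigned URL and returns the part's ETag.
async function putPart(url: string, chunk: Blob): Promise<string> {
  const res = await fetch(url, {
    method: "PUT",
    headers: { "Content-Type": "application/octet-stream" },
    body: chunk,
  });
  if (!res.ok) {
    throw new Error(`Part upload failed with HTTP ${res.status}`);
  }
  const etag = res.headers.get("ETag");
  // In the browser, a missing ETag here usually means the bucket's CORS
  // policy does not list ETag under ExposeHeaders.
  if (!etag) {
    throw new Error("Response has no readable ETag header");
  }
  return etag;
}
```

Throwing on a non-2xx status matters because fetch only rejects on network errors, not on HTTP error responses such as a 403 from an expired URL.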

5️⃣ Complete the Upload

POST /api/complete-upload

→ AWS assembles all parts into a final file

Done. 🎉 You’ve uploaded a 1GB file without stressing your server at all!
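One detail worth sketching for the completion step: S3 expects the list of parts in ascending part-number order, each with its ETag. Parallel uploads tend to finish out of order, so a small helper like this one (names are my own) can normalise the list before it is sent to the complete-upload route.

```typescript
interface UploadedPart {
  partNumber: number;
  etag: string;
}

// Produces the shape used by the MultipartUpload.Parts field of
// CompleteMultipartUploadCommand: ascending PartNumber, each with its ETag.
function toCompletedParts(parts: UploadedPart[]): { PartNumber: number; ETag: string }[] {
  return [...parts] // copy so the caller's array is not mutated
    .sort((a, b) => a.partNumber - b.partNumber)
    .map(({ partNumber, etag }) => ({ PartNumber: partNumber, ETag: etag }));
}
```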

💥 Real Challenges I Faced (and Solved!)

🤩 1. CORS Issues

Problem: Browser blocked my S3 uploads 😠

Fix: I configured the S3 bucket’s CORS policy like this:

    [
      {
        "AllowedHeaders": ["*"],
        "AllowedMethods": ["PUT"],
        "AllowedOrigins": [
          "http://localhost:3000",
          "https://next-js-s3-multipart-file-upload.vercel.app"
        ],
        "ExposeHeaders": ["ETag"],
        "MaxAgeSeconds": 3000
      }
    ]

For production, restrict AllowedOrigins to your frontend domain.

🤩 2. 2-Minute Timeouts on Uploads

Some parts were failing when a single part upload took more than 2 minutes.

Fix: I reduced my chunk size to 10–20MB and limited parallel uploads to 3–5 at a time. This ensured better stability in low-bandwidth cases.
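Capping parallel uploads at 3 to 5 can be done with a small worker pool. This is a generic sketch, not the exact code from my repo, and the helper name is made up.

```typescript
// Runs `worker` over `items` with at most `limit` calls in flight at once.
// Results keep the order of `items`. The returned promise rejects on the
// first worker error; lanes that are already running are not cancelled.
async function mapWithConcurrency<T, R>(
  items: readonly T[],
  limit: number,
  worker: (item: T, index: number) => Promise<R>,
): Promise<R[]> {
  const results = new Array<R>(items.length);
  let next = 0;
  const lane = async (): Promise<void> => {
    while (next < items.length) {
      const i = next++; // no race: nothing else runs between the check and the increment
      results[i] = await worker(items[i], i);
    }
  };
  const laneCount = Math.max(1, Math.min(limit, items.length));
  await Promise.all(Array.from({ length: laneCount }, () => lane()));
  return results;
}
```

With a hypothetical `uploadPart` function, usage would be along the lines of `await mapWithConcurrency(parts, 4, (part) => uploadPart(part))`. Each lane picks up the next part as soon as its current one finishes, so slow parts do not hold up the rest.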

🤩 3. Tracking Progress Per Chunk

How do I show upload progress?

Solution: Each chunk’s fetch(PUT) resolves once that part has finished uploading, so I count completed chunks as they come back.

I calculated the percentage like this:

    progress = ((completedChunks / totalChunks) * 100).toFixed(2);

🤩 4. Re-uploading Failed Parts

What if one part fails?

I built logic to retry failed uploads using a simple retry loop. In the future, I plan to add:

- Pause/resume

- Abort upload

- Upload history with DB
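For the retry loop mentioned above, a minimal version might look like this. The exponential backoff between attempts and the default values are my assumptions for the sketch, and the helper name is hypothetical.

```typescript
// Retries `task` up to `maxAttempts` times, waiting longer after each failure.
// Rethrows the last error if every attempt fails.
async function withRetry<T>(
  task: () => Promise<T>,
  maxAttempts = 3,
  baseDelayMs = 500,
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return await task();
    } catch (err) {
      lastError = err;
      if (attempt < maxAttempts) {
        // Exponential backoff: baseDelayMs, then 2x, then 4x, ...
        const delay = baseDelayMs * 2 ** (attempt - 1);
        await new Promise((resolve) => setTimeout(resolve, delay));
      }
    }
  }
  throw lastError;
}
```

Wrapping a single part upload would then be `withRetry(() => uploadPart(part))`, where `uploadPart` is a placeholder for your own upload function. Because presigned URLs expire, retries that run past the expiry window would need a fresh URL first.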

📋 AWS Docs That Helped Me (Big Time)

📂 My GitHub Repo

👉 Next.Js-S3-Multipart-File-Upload

Includes:

- /api routes: create, presign, complete, abort

- /utils: upload logic

- UI: file picker, progress bar, error status

💡 Final Tips — From Me to You

- 🔒 Never upload files directly to your backend — let S3 handle it.

- 🧱 Always split big files — even 1GB is manageable with smart chunks.

- 🚫 Watch out for CORS and expired presigned URLs.

- 🚀 Use @aws-sdk/client-s3 instead of the older aws-sdk.

🤝 Wrapping Up

Building this was like solving a Rubik’s cube — tricky at first, but fun once you understand the layers.

Now I can upload gigabyte-sized files with progress tracking and parallel uploads, without ever exposing AWS credentials to the browser 🔐

I hope this blog helps you skip the guesswork and build your own version faster and better.

Happy uploading! 🚀

Feel free to reach out to me on GitHub or LinkedIn if you have questions or want to collaborate!